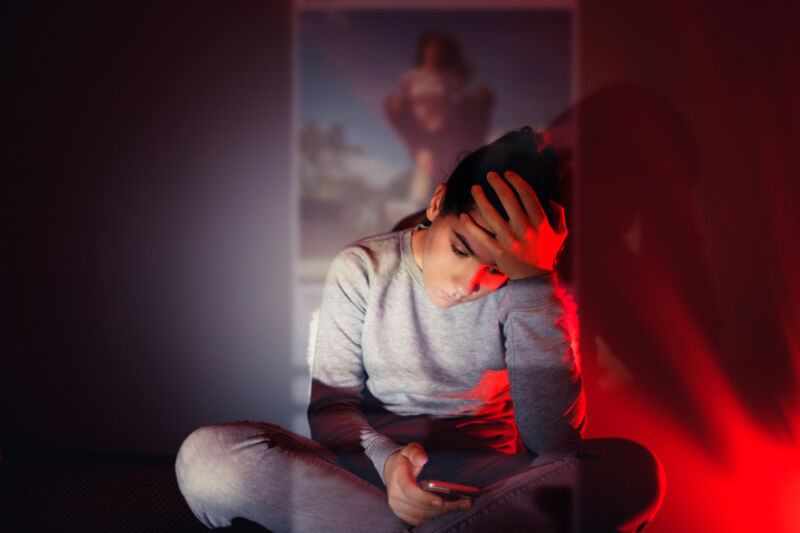

Credit: Georgijevic | E+

The US seems to be getting serious about criminalizing deepfake pornography after teen boys at a New Jersey high school used AI image generators to create and share non-consensual fake nude images of female classmates last October.

On Tuesday, Rep. Joseph Morelle (D-NY) announced that he has reintroduced the "Preventing Deepfakes of Intimate Images Act," which seeks to "prohibit the non-consensual disclosure of digitally altered intimate images." Under the proposed law, anyone sharing deepfake pornography without an individual's consent risks damages that could go as high as $150,000 and imprisonment of up to 10 years if sharing the images facilitates violence or impacts the proceedings of a government agency.

The hope is that steep penalties will deter companies and individuals from allowing the disturbing images to spread. The bill creates a criminal offense for sharing deepfake pornography "with the intent to harass, annoy, threaten, alarm, or cause substantial harm to the finances or reputation of the depicted individual" or with "reckless disregard" or "actual knowledge" that images will harm the individual depicted. It also provides a path for victims to sue offenders in civil court.

Ars Technica - All content